Moving from Vagrant to Docker can be a daunting idea. I’ve personally been putting it off for a long time, but since I discovered that Docker had released a “native” OS X client I decided it was finally time to give it a go. I’ve been using Vagrant for years to spin up a unique development environment for each of the client projects that I work on and it works very well, but does have some shortcomings that I was hoping that Docker would alleviate. I’ll tell you now, the transition to Docker was not as difficult as I had built it up to be in my mind.

Let’s start off with the basics of Docker and how it differs from Vagrant. Docker is a container-based solution, where you build individual containers for each of the services your application requires. What does this mean practically? Well, if you’re familiar with Vagrant you will know that Vagrant helps you create one large monolithic VM and installs and configures (through configuration management tools like Puppet or Chef) everything that your application needs. This means that for each project you have a full-stack VM running, which is very resource intensive. Docker, on the other hand, can run only the services you need by utilizing containers.

Docker Containers

So what are Docker containers? Well, if we’re developing a PHP application, there are a few things that we will need. We need an application server to run PHP, a web server (like Apache or nginx) to serve our code, and a database server to run our MySQL instance. In Vagrant, I would have built an Ubuntu VM and had Puppet install and configure these services on that machine. Docker allows you to separate those services and run each one in its own container, which is much more lightweight than a full VM. Docker then provides networking between those containers to allow them to talk to each other.

NOTE: In my example below I’m going to combine the PHP service and the Apache service into one container for simplicity and since logically there isn’t a compelling reason to separate them.

One Host to Rule Them All

At first running multiple containers seems like it would be MORE resource intensive than Vagrant, which only runs a single VM. In my example, I’m now running multiple containers where I only had to run a single Vagrant VM… how is this a better solution? Well, the way that Docker implements its containers makes it much more efficient than an entire VM.

Docker at its heart runs on a single, very slimmed down host machine (on OS X). For the purpose of this article, you can think of Docker as a VM running on your machine and each container that you instantiate runs on the VM and gets its own sandbox to access necessary resources and separate it from other processes. This is a very simplistic explanation of how Docker works and if you’re interested in a more in-depth explanation, Docker provides a fairly thorough overview here: https://docs.docker.com/engine/understanding-docker/

Docker Images

Now that we know what Docker containers are, we need to understand how they’re created. As you may have guessed from the header above, you create containers using Docker images. A Docker image is defined in a file called a ‘Dockerfile’, which is very similar in function to a Vagrantfile. The Dockerfile simply defines what your image should contain and do. Similar to how an object is an instance of a class, a Docker container is an instance of a Docker image. Like a class, a Docker image is also extensible and re-usable. So a single MySQL image can be used to spin up database service containers on 5 different projects.

You can create your own Docker Images from scratch or you can use and extend any of the thousands of images available at https://hub.docker.com/

Image Extensibility

As I noted above, Docker Images are extensible, meaning that you can use an existing image and add your own customizations on top of it. In the example below, I found an image on the Docker Hub, ‘eboraas/apache-php’, that was very close to what I needed with just a couple of tweaks. One of the big advantages of Docker is that you can pull an image like this and layer your own changes on top rather than starting from scratch. It also means that if the base image changes, you can pick up those changes by pulling the updated base and rebuilding your image, without rewriting any of your own customizations.
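Changes to a base image reach a derived image when that image is rebuilt. Here is a minimal sketch of that rebuild, assuming the customized image is tagged ‘nick/apache-php56’ as it is later in this article. The helper name `rebuild_with_fresh_base` is hypothetical; the `--pull` option asks `docker build` to check for a newer copy of the base image before building.

```shell
# Hypothetical helper: rebuild an image and refresh its base image first.
# $1 = tag for the resulting image, $2 = build context (defaults to ".").
rebuild_with_fresh_base() {
  tag="$1"
  context="${2:-.}"
  # --pull makes the build try to fetch a newer copy of the FROM image.
  docker build --pull -t "$tag" "$context"
}
```

From the directory containing the Dockerfile, this would be called as `rebuild_with_fresh_base nick/apache-php56`.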

Docker Compose

When you install Docker on OS X, you’ll get a tool called Docker Compose. Docker Compose is a tool for defining and running applications with multiple Docker containers. So instead of having to individually start all of your containers on the command line, each with its own parameters, it allows you to define those instructions in a YAML file and run one command to bring up all the containers.

Docker Compose is also what will allow your Docker containers to talk to each other. After all, once your web server container is up and running it will need to talk to your database server which lives in its own container. Docker Compose will create a network for all your containers to join so that they have a way to communicate with each other. You will see an example of this in our setup below.

Docker Development Application Setup

All of this Docker stuff sounds pretty cool, right? So let’s see a practical example of setting up a typical PHP development environment using Docker. This is a real world example of how I set up my local dev environment for a new client with an existing code base.

Install Docker

The first thing you’re going to want to do is install Docker. I’m not going to walk through all the steps here as Docker provides a perfectly good guide. Just follow along the steps here: https://docs.docker.com/docker-for-mac/

Now that you’ve got Docker installed and running, we can go ahead and open up a terminal and start creating the containers that we’ll need!

MySQL Container

Now, typically I would start with my web server and once that is up and running I would worry about my database. In this case the database is going to be simpler (since I’ll need to do some tweaking on the web server image) so we’ll start with the easier one and work our way up. I’m going to use the official MySQL image from the Docker Hub: https://hub.docker.com/_/mysql/

You can get this image by running:

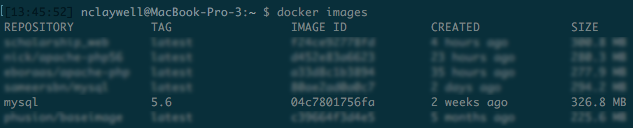

docker pull mysqlAfter pulling the mysql image you should be able to type ‘docker images’ and it will show up in the list:

Now that we’ve pulled the image to our local machine, we can run it with this command:

    docker run mysql

This will create a container from the image with no configuration options at all, just a vanilla MySQL server instance. (The official image generally expects a root password option such as MYSQL_ROOT_PASSWORD, so this bare command may exit with an error instead of leaving a server running.) This is not super useful for us, so let’s go ahead and `Ctrl + C` to stop that container and we’ll take it one step further with this command:

    docker run -p 3306:3306 --name my-mysql -e MYSQL_ROOT_PASSWORD=1234 -d mysql:5.6

We’re now passing in a handful of optional parameters to our run command which do the following:

- `-p 3306:3306` – This option is for port forwarding. We’re telling the container to forward its port 3306 to port 3306 on our local machine (so that we can access mysql locally).

- `--name my-mysql` – This is telling Docker what to name the container. If you do not provide this, Docker will just assign a randomly generated name which can be hard to remember/type (like when I first did this and it named my container `determined_ptolemy`).

- `-e MYSQL_ROOT_PASSWORD=1234` – Here we are setting an Environment variable, in this case that the root password for the MySQL server should be ‘1234’.

- `-d` – This option tells Docker to background the container, so that it doesn’t sit in the foreground of your terminal window.

- `mysql:5.6` – This is the image that we want to use, with a specified tag. In this case I want version 5.6 so I specified it here. If no tag is specified it will just use latest.

After you’ve run this command, you can run ‘docker ps’ and it will show you the list of your running containers (if you do ‘docker ps -a’ instead, it will show all containers, not just running ones).
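For scripting, the same check can be done without reading the ‘docker ps’ table by eye. This is a small sketch, not part of the original setup: the helper name `container_is_running` is hypothetical, and it relies on `docker inspect -f` with the `.State.Running` field, which prints `true` or `false` for an existing container.

```shell
# Hypothetical helper: succeed (exit 0) only if the named container is running.
# $1 = container name, for example my-mysql.
container_is_running() {
  # docker inspect fails if the container does not exist;
  # that case is treated the same as "not running".
  state=$(docker inspect -f '{{.State.Running}}' "$1" 2>/dev/null) || return 1
  [ "$state" = "true" ]
}
```

A typical use would be `container_is_running my-mysql && echo "MySQL container is up"`.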

Your Alternative in Docker Compose

This is kind of a clunky command to have to remember and type every time you want to bring up your MySQL instance. In addition, bringing up the container in this way forwards port 3306 to your local machine, but doesn’t give it an interface to interact with other containers. No need to worry, though: this is where Docker Compose is going to come in handy.

For now, let’s just stop and remove our container and we’ll use it again later with docker-compose. The following commands will stop and remove the container you just created (but the image will not be deleted):

    docker stop my-mysql
    docker rm my-mysql

NOTE: Explicitly pulling the image is not required. You can simply do ‘docker run mysql’ and it will pull the image and then run it, but we’re explicitly pulling just for the purpose of demonstration.

Apache/PHP Container

I’ve searched on https://hub.docker.com and found a suitable Apache image that also happens to include PHP: https://hub.docker.com/r/eboraas/apache-php/. Two birds with one stone, great!

Now, this image is very close to what I need, but there are a couple of things missing that my application requires. First of all, I need the php5-mcrypt extension installed. This application also has an ‘.htaccess’ file that does URL rewriting, so I need to set ‘AllowOverride All’ in the Apache config.

I’m going to create my own image that extends the ‘eboraas/apache-php’ image and makes those couple changes. To create your own image, you’ll need to first create a Dockerfile. In the root of your project go ahead and create a file named ‘Dockerfile’ and insert this content:

    FROM eboraas/apache-php
    COPY docker-config/allowoverride.conf /etc/apache2/conf.d/
    RUN apt-get update && apt-get -y install php5-mcrypt && apt-get clean && rm -rf /var/lib/apt/lists/*

Let’s go through this line-by-line:

- We use ‘FROM’ to denote what image we are extending. Docker will use this as the base image and add on our other commands

- Tells Docker to ‘COPY’ the ‘docker-config/allowoverride.conf’ file from my local machine to ‘/etc/apache2/conf.d’ in the container

- Uses ‘RUN’ to run a command in the container that updates apt and installs php5-mcrypt and then cleans up after itself.

Before this will work, we need to actually create the file we referred to in line 2 of the Dockerfile. So create a folder named ‘docker-config’ and a file inside of that folder called ‘allowoverride.conf’ with this content:

    <Directory "/var/www/html">
        AllowOverride All
    </Directory>

The following commands do not need to be executed for this tutorial; they are just for example! If you do run them, just be sure to stop the container and remove it before moving on.

At this point, we could build and run our customized image:

    docker build -t nick/apache-php56 .

This will build the image described in our Dockerfile and name it ‘nick/apache-php56’. We could then run our custom image with:

    docker run -p 8080:80 -p 8443:443 -v /my/project/dir/:/var/www/html/ -d nick/apache-php56

The only new option in this is:

- `-v /my/project/dir/:/var/www/html/` – This mounts a local directory into the container as a volume, so /my/project/dir on the local machine appears at /var/www/html in the container and changes on either side are visible on the other.

Docker Compose

Instead of doing the complicated ‘docker run […]’ commands manually, we’re going to go ahead and automate the process so that we can bring up all of our application containers with one simple command! The command that I’m referring to is ‘docker-compose’, and it gives you a way to take all of those parameters that we tacked onto the ‘docker run’ command and put them into a YAML configuration file. Let’s dive in.

Create a file called ‘docker-compose.yml’ (on the same level as your Dockerfile) and insert this content:

    version: '2'
    services:
      web:
        build: .
        container_name: my-web
        ports:
          - "8080:80"
          - "8443:443"
        volumes:
          - .:/var/www/html
        links:
          - mysql
      mysql:
        image: mysql:5.6
        container_name: my-mysql
        ports:
          - "3306:3306"
        environment:
          MYSQL_ROOT_PASSWORD: 1234

This YAML config defines two different containers and all of the parameters that we want when they’re run. The ‘web’ container tells it to ‘build: .’, which will cause it to look for our Dockerfile and then build the custom image that we made earlier. Then when it creates the container it will forward our ports for us and link our local directory to ‘/var/www/html’ on the container. The ‘mysql’ container doesn’t get built, it just refers to the image that we pulled earlier from the Docker Hub, but it still sets all of the parameters for us.

Once this file is created you can bring up all your containers with:

    docker-compose up -d

Using Your Environment

If you’ve followed along, you should be able to run `docker ps` and see both of your containers up and running. Since we forwarded port 80 on our web container to port 8080 locally, we can visit ‘http://localhost:8080’ in our browser and be served the index.php file that is located in the same directory as the docker-compose.yml file.
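The bring-up step can also be wrapped so that a malformed YAML file is caught before anything starts. This is only a sketch under stated assumptions: the function name `compose_up_checked` is hypothetical, and it should be run from the directory that holds ‘docker-compose.yml’. It uses `docker-compose config -q`, which validates the file without printing it, followed by the same ‘up -d’ used above and a ‘ps’ to list the resulting containers.

```shell
# Hypothetical helper: validate docker-compose.yml, start the services,
# then list them. Run it from the directory containing docker-compose.yml.
compose_up_checked() {
  # "config -q" only validates the file and prints nothing on success.
  if ! docker-compose config -q; then
    echo "docker-compose.yml failed validation" >&2
    return 1
  fi
  # Build (if needed) and start everything in the background, then show status.
  docker-compose up -d && docker-compose ps
}
```

Calling `compose_up_checked` with no arguments is enough; a non-zero exit status means either the file was invalid or a container failed to start.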

I can also connect to the MySQL server from my local machine (since we forwarded port 3306) by using my local MySQL client:

    mysql -h 127.0.0.1 -u root -p1234

NOTE: You have to use the loopback address instead of localhost to avoid socket errors.
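On a first start the MySQL container needs a few seconds to initialize before it accepts connections, so a connection attempt made right after ‘docker-compose up -d’ can fail. Here is a hedged sketch of a wait loop, assuming the `mysqladmin` tool from your local MySQL client package is installed and using the same host, user and password as above. The function name `wait_for_mysql` is hypothetical.

```shell
# Hypothetical helper: poll until the MySQL server answers, or give up.
# $1 = maximum number of attempts (default 30), one second apart.
wait_for_mysql() {
  attempts="${1:-30}"
  while [ "$attempts" -gt 0 ]; do
    # mysqladmin ping exits 0 once the server is accepting connections.
    if mysqladmin ping -h 127.0.0.1 -u root -p1234 --silent >/dev/null 2>&1; then
      return 0
    fi
    attempts=$((attempts - 1))
    sleep 1
  done
  # The server never answered within the allowed number of attempts.
  return 1
}
```

For example, `wait_for_mysql 60 && mysql -h 127.0.0.1 -u root -p1234` would open the client only once the server is ready.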

Talking to a MySQL Server

But how do we configure our web application to talk to the MySQL server? This is one of the beautiful things about docker-compose. In the ‘docker-compose.yml’ file you can see that we defined two things under services: mysql and web. By default, docker-compose will create a single network for the app defined in your YAML file. Each container defined under a service joins the default network and is reachable and discoverable by other containers on the network. When we defined ‘mysql’ and ‘web’ as services, docker-compose created the containers and had them join the same network under the hostnames ‘mysql’ and ‘web’. So in my web application’s config file where I define the database connection parameters, I can do the following:

    define('DB_DRIVER', 'mysqli');
    define('DB_HOSTNAME', 'mysql');
    define('DB_USERNAME', 'root');
    define('DB_PASSWORD', '1234');
    define('DB_DATABASE', 'dbname');

As you can see, all I have to put for my hostname is ‘mysql’ since that is what the database container is named on the Docker network that both containers are connected to.
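If the application cannot reach the database, one quick way to confirm that the hostname resolves is to ask from inside the web container itself. This is a sketch, assuming the container names from the ‘docker-compose.yml’ above and that `getent` is available in the image (it ships with most Debian-based images). The function name `resolve_from_container` is hypothetical.

```shell
# Hypothetical helper: print the address a hostname resolves to from
# inside a running container. $1 = container name, $2 = hostname to look up.
resolve_from_container() {
  # getent uses the container's own name resolution,
  # so it sees what the application inside it would see.
  docker exec "$1" getent hosts "$2"
}
```

With both containers running, `resolve_from_container my-web mysql` should print an IP address followed by the name if the lookup succeeds.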

Vagrant to Docker Comparison Conclusions

Now I’ll circle back to my original comparison of Vagrant to Docker. In my experience with Docker, I believe it to be better than Vagrant.

Docker uses fewer resources. Compared to running a full stack VM, these containers are so lightweight that I can actually feel the performance difference.

Docker is faster to spin up environments. Doing a ‘vagrant up --provision’ for the first time would often take in excess of 15 minutes to complete, whereas the ‘docker-compose up -d’ that we just ran took a matter of seconds.

Docker is easier to configure. Writing Ruby scripts for Vagrant (or generating them with PuPHPet) would have taken me a long time, whereas extending a Docker image and adding a few simple commands took no time at all.

Hopefully this article was helpful for you in exploring what Docker has to offer. Docker also has extensive and detailed documentation available online at: https://docs.docker.com/

If you still don’t feel ready to dive right in, it may be helpful to run through the “Get Started with Docker” tutorial that Docker provides: https://docs.docker.com/engine/getstarted/

Vagrant can work with Docker: https://www.vagrantup.com/docs/docker/basics.html

Yes it can! If I were trying to exactly match a production environment that uses Docker containers, then running Docker as a Vagrant provider would be an excellent solution.

My point, however, is that I don’t need to use Vagrant. Using Docker as a provider for Vagrant means that Vagrant needs to spin up a VM to run the Docker daemon in. Alternatively, I could just skip the Vagrant VM and have Docker run natively on my OS as I do in the article above.
